Improve Operational Excellence With Advanced Analytics

At a time when markets are unsettled, profit margins are slim and all eyes are on corporate sustainability, chemical manufacturers are embracing new operating strategies. Besides traditional spending for equipment or process changes to improve outcomes, many companies now also are investing in data analytics and associated skills, in particular advanced analytics.

These investments enable chemical manufacturers to transcend data silos so engineers and other subject matter experts (SMEs) quickly can analyze data from legacy process historians, relational databases and new Industrial Internet of Things (IIoT) sensors. Cloud-based advanced analytics applications are a key part of this transformation, addressing the issues of time to return on investment, multi-source data access and distributing insights across the organization.

Thanks to self-service analytics, the frontline SMEs closest to the assets and processes no longer must spend hours wrangling and aligning data; instead, they can use that time to uncover process, quality and environmental improvements. Cloud-based software accelerates the enterprise-wide deployment of these improvements by simplifying collaboration between information technology (IT) and operational technology (OT) leadership. As a result, early adopters of these innovations are moving ahead of their competition and prospering in a tough market environment.

Defining Data Strategy

When crude oil was trading near $100/bbl, chemical makers primarily relied upon capital improvement projects to increase production capacity. This spending to boost capacity has resulted in a chemicals market that is saturated in many sectors, driving down selling prices and forcing companies to rethink how they can eke out an extra $0.05/lb profit margin.

With traditional value-creation strategies no longer generating the return they once did, chemical manufacturers are adopting alternative approaches to drive operational excellence. Many companies now are placing greater emphasis on extracting more value from data through process modeling, optimization, key performance indicator (KPI) calculation and loss tracking.

Making the most of analytics requires strategy and enterprise alignment from the start. It demands answers to questions such as:

• What data currently are being stored?

• Who are the primary users of those data and where are these users located?

• What type of calculations do the data go into?

• What frequency of data is required for the analysis?

• Is analysis based on historical, near-real-time or forecasted data?

• What data sources are being used together to create insights?

With an understanding of how and where to store data, the focus shifts to what types of analyses to prioritize. This could mean defining a specific set of KPIs to evaluate, periodically report and benchmark across production sites. It could involve standardizing the analysis and monitoring of key process equipment (pumps, compressors, valves, etc.) across the organization, or it could mean incorporating machine learning, artificial intelligence or digital twins to solve predictive maintenance challenges.

One of the most effective ways to crank out analytics at scale is to empower existing SMEs with user-friendly self-service advanced analytics tools. The SMEs, through their process knowledge and awareness, can put the data into proper context, eliminating the contextualization feedback loop often experienced with siloed data science groups in organizations. Chemical manufacturers are embracing this strategy with the understanding that maximum value is created by combining advanced analytics software with empowered employees.

Overcoming Common Obstacles

Despite technology advances spurred by cloud-based software delivery, data access still lingers as one of the most significant barriers to engineering productivity. Live connections to process and contextual data sources are critical for near-real-time analytics and for acting on the results — but these connections frequently are not available. As Figure 1 highlights, traditional, often spreadsheet-based, methods of aggregating data from multiple sources or over long periods of time commonly pose a number of problems. Cloud-based advanced analytics addresses these and other issues to increase productivity and speed time to insight. The combined data are indexed on demand by the SME and displayed in an interactive point-and-click environment, making it easy to perform calculations and get immediate visual feedback of the results.
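To make the alignment problem concrete, the following minimal Python sketch pairs each laboratory sample with the most recent historian reading taken at or before the sample time. The function name, the timestamps (in seconds) and the values are hypothetical illustrations and do not come from any vendor tool; an advanced analytics application would be expected to handle this step for the user.

```python
from bisect import bisect_right


def align_as_of(sample_times, historian_times, historian_values):
    """For each sample time, return the latest historian value recorded at
    or before that time, or None if no earlier reading exists.

    historian_times must be sorted in ascending order.
    """
    aligned = []
    for t in sample_times:
        # Index of the first historian timestamp strictly after t.
        i = bisect_right(historian_times, t)
        aligned.append(historian_values[i - 1] if i > 0 else None)
    return aligned


# Hypothetical data: historian readings every 60 s, lab samples at odd times.
historian_times = [0, 60, 120, 180]
historian_values = [1.0, 1.2, 1.1, 1.4]
lab_sample_times = [90, 180, -5]

print(align_as_of(lab_sample_times, historian_times, historian_values))
```

Doing this by hand in a spreadsheet for every tag and every sample is the kind of wrangling the article describes.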

A user-friendly environment is imperative to make advanced analytics accessible to SMEs, many of whom lack a programming background. Another key factor is the ability to display the visualizations and results of various analyses in a comprehensive dashboard and reporting tool. Reports built in browser-based advanced analytics applications enable easy access to insights and click-through functionality for further investigation.

With the data access and computation challenges addressed, the choke point shifts downstream to how an organization operationalizes the insights gleaned from analytics. Converting an analytics insight into a physical action taken by frontline process personnel requires an advanced network of information flow among systems. The cloud and related services empower chemical manufacturers to do this in near-real-time at scale.

Figure 1. Traditional approach to analyzing process engineering data using a spreadsheet poses a variety of issues.

Delivering Value

Once the proper foundation is in place, a chemical maker can achieve significant improvements. Let’s look at three actual examples.

Product quality. Critical measurements like yield, composition and viscosity often are difficult to monitor online; so, instead, samples are taken and sent to a laboratory. An unexpected result from the laboratory analysis initiates a feedback loop, manipulating upstream parameters to achieve the desired downstream quality result. It’s important for manufacturers to understand which independent variables in the process most directly link to the desired outcome and to what magnitude because this information is needed to maximize product quality, yield and other parameters.

One large-volume chemical maker pulled the relevant process variables into a Seeq Workbench display and identified time periods comprising both normal and abnormal operation. Using a correlation matrix algorithm built in Seeq Data Lab, it determined the manipulated variables with the largest impact on the measured quality variable. After removing outliers and downtime data from relevant signals, the company accounted for process dynamics by delaying each upstream signal by the offset of maximum correlation with the target signal. It then built a predictive model of product quality and deployed the model to control room operators via an auto-updating dashboard.
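The idea of delaying an upstream signal by its offset of maximum correlation can be illustrated with a short Python sketch. This is not the company's algorithm or Seeq's implementation; it assumes evenly sampled signals of equal length, uses Pearson correlation, and all function names and data are hypothetical.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant signal carries no correlation information
    return cov / sqrt(var_x * var_y)


def best_lag(upstream, target, max_lag):
    """Return (lag, correlation) for the delay, in samples, at which the
    upstream signal correlates most strongly (by absolute value) with the
    target signal."""
    best = (0, 0.0)
    for lag in range(max_lag + 1):
        # Pair upstream[t] with target[t + lag]: upstream leads by `lag`.
        x = upstream[: len(upstream) - lag]
        y = target[lag:]
        r = pearson(x, y)
        if abs(r) > abs(best[1]):
            best = (lag, r)
    return best


def delay(signal, lag):
    """Shift a signal later by `lag` samples, padding the start with None."""
    return [None] * lag + signal[: len(signal) - lag]


# Synthetic example: the target repeats the upstream signal 3 samples later.
upstream = [0, 0, 1, 0, 0, 2, 0, 0, 1, 0, 0, 0]
target = [0, 0, 0, 0, 0, 1, 0, 0, 2, 0, 0, 1]

lag, r = best_lag(upstream, target, max_lag=5)  # lag is 3 for this data
aligned_upstream = delay(upstream, lag)
```

Once each upstream signal is shifted by its own lag, the aligned signals can serve as inputs to a regression model of the quality variable.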

The deployment of the dashboard has given those closest to the manufacturing process a better understanding of the product quality of in-process materials. Based on the prediction of future laboratory sample data, operators now make proactive adjustments to fine-tune the measured variable to align with the targets. This mechanism of proactive product quality control is saving the company $1–5 million/yr in product downgrade losses.

Sustainability and environmental stewardship. Chemical manufacturers are looking for ways to improve sustainability and drive toward carbon-neutral, low-emissions operations. One of the key capabilities needed to reduce emissions is developing an understanding of the quantity of emissions being released, and then tracking those cumulative volumes against annual permit limits. Accurate reporting becomes more challenging when vent stack analyzers peg out at their limits, necessitating use of estimates. The estimation process is complex and highly manual, requiring engineering support at the time of an exceedance.

So, a chemical company used Seeq Workbench to create an automated model of a pollutant detector’s behavior during the time periods when its range was exceeded. Model development required the use of capsules to isolate the data set for the time periods before, during and after a detector range exceedance occurred. Regression models were fit to the data before and after the exceedance, and then extrapolated forward and backward to generate a continuous modeled signal; this is used to calculate the maximum concentration of pollutant. Scorecards provide a quick summary view of maximum concentration and total pollutant released during an exceedance event.
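A simplified Python sketch shows how regressions on either side of an exceedance can be extrapolated to estimate the missing peak. The article does not state the form of the regression or how the two extrapolations were combined, so this sketch makes two assumptions: both fits are straight lines, and the modeled value at each instant is the lower of the two extrapolations, which places the peak where the lines cross. All names and readings are hypothetical.

```python
def fit_line(times, values):
    """Ordinary least-squares straight-line fit; returns (slope, intercept)."""
    n = len(times)
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values))
    slope = sxy / sxx
    return slope, mean_v - slope * mean_t


def model_exceedance(before, after, gap_times):
    """Estimate the signal while the analyzer was pegged at its limit.

    before / after: lists of (time, reading) pairs on either side of the gap.
    gap_times: timestamps inside the exceedance window.
    Returns a list of (time, estimate) pairs.
    """
    rise_slope, rise_icpt = fit_line(*zip(*before))
    fall_slope, fall_icpt = fit_line(*zip(*after))
    modeled = []
    for t in gap_times:
        rising = rise_slope * t + rise_icpt    # pre-event fit, run forward
        falling = fall_slope * t + fall_icpt   # post-event fit, run backward
        # Assumption: take the lower of the two extrapolations.
        modeled.append((t, min(rising, falling)))
    return modeled


# Hypothetical analyzer with a range of 0 to 100 that pegs between t=4 and t=8.
before = [(0, 20), (1, 40), (2, 60), (3, 80)]
after = [(9, 80), (10, 60), (11, 40)]

modeled = model_exceedance(before, after, gap_times=[4, 5, 6, 7, 8])
peak_time, peak_value = max(modeled, key=lambda pair: pair[1])
```

The total released during the event could then be estimated by integrating the modeled signal, multiplied by flow rate, over the exceedance window.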

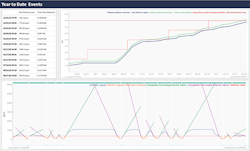

Figure 2. Auto-updating report shows year-to-date events as well as cumulative emissions against permit limits.

The visualizations developed were compiled into a single auto-updating report displaying data for the most recent exceedance event alongside visualizations tracking year-to-date progression toward permit limits (Figure 2). Retrospective application of the model revealed pollutant volumes likely were underreported in previous years; so, the company decided to make proactive process adjustments when it became clear that the current year was on a similar trajectory.
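Tracking year-to-date progression toward a permit limit comes down to simple arithmetic, sketched below in Python. The straight-line projection to year end is an assumption made for illustration, not the method the company used, and the function name, units and figures are hypothetical.

```python
def permit_status(event_releases, permit_limit, day_of_year, days_in_year=365):
    """Summarize cumulative releases against an annual permit limit.

    event_releases: quantity released in each event so far this year.
    Returns the year-to-date total, the fraction of the limit consumed and
    a straight-line projection of the year-end total.
    """
    total = sum(event_releases)
    # Assumption: releases continue at the average rate seen so far.
    projected = total * days_in_year / day_of_year
    return {
        "year_to_date": total,
        "fraction_of_limit": total / permit_limit,
        "projected_year_end": projected,
        "on_track_to_exceed": projected > permit_limit,
    }


# Hypothetical figures: three events by day 100 against a limit of 100 units.
status = permit_status([12.0, 8.0, 20.0], permit_limit=100.0, day_of_year=100)
print(status)
```

A projection that crosses the limit well before year end is the kind of signal that would prompt proactive process adjustments.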

Worldwide roll-out via the cloud. Global chemical manufacturer Covestro, Leverkusen, Germany, launched a digitization initiative called process data analysis and visualization (or ProDAVis) for process monitoring. The aim is to provide employees at all its sites worldwide with process data access and analytics tools to achieve digital transformation.

One of Covestro’s key objectives was to achieve sustainable solutions, not short-term quick fixes. This meant that OT and IT leadership had to create a shared vision that didn’t require ripping and replacing the existing operational data infrastructure but, rather, building on and evolving that infrastructure to gain greater value from the company’s data. An essential element was providing cloud-based analytics tools that each employee could use to drive both immediate and longer-term operational improvements. This approach accelerated Covestro’s time to value by leveraging what already was in place while including its employees in the technology selection and usage, and by using the cloud to scale up more quickly.

Best Practices And Potential Pitfalls

For chemical manufacturers, the fastest path to value is starting with use-cases and data that already are accessible, instead of leading with technology and then searching for a problem to solve. Common use-cases that self-service advanced analytics can quickly address are batch cycle time, golden batch, energy consumption and process monitoring.

To move ahead quickly, many companies are choosing software-as-a-service applications that offer rapid deployment in the cloud (sometimes within hours) and include secure connections to data — enabling users to begin work quickly. A common mistake at this juncture is to first move all data to one location, often the cloud, before beginning any work. This delays implementation and often results in abandonment of projects due to long periods between investment and results.

As mentioned earlier, it’s important to put employee knowledge to work and ensure that SMEs are at the heart of any analytics program or investments. SMEs understand the industrial process, data and context best. Choosing “black box” machine learning or analytics tools not only precludes capturing the insights of these experts but also can result in a lack of SME engagement. Easy-to-use and flexible analytics tools create new opportunities to gain value by marrying the best of people, process and technology.

Finally, you must begin with the end in mind. Adopting operational analytics across the enterprise eventually will require the global scale and reach of the cloud. However, this doesn’t mean you can’t do work until all data are in the cloud. Choosing cloud-based tools that can access both cloud and on-premise data sources ensures insights from data can be readily generated, starting with one employee and scaling out to thousands.

Enterprise Scale-Out With The Cloud

As chemical manufacturers achieve gains from continuous operational improvements generated from the insights created by their employees, the path forward is to extend the use of analytics across more assets and plants, and, eventually, to global operations. This requires use of the cloud.

Cloud-based analytics programs received a large share of IT attention and budget about a decade ago with the emergence of big data technologies. Many industries, from financial services to retail to healthcare, invested to harness the cloud for data collection, data storage, analyses and machine-learning tools to help them solve pressing business problems. The first wave of investment focused on using the cloud to store large volumes of data across the business value chain, including customer, financial, enterprise resource planning and business data.

Bringing access to disparate data sets together across different departments within a corporation provided 360° views of business performance. The emergence of self-service business intelligence (BI) applications connected to these cloud databases — using tools like Tableau, Microsoft Power BI or Spotfire — enabled business users to create analyses without learning to code.

This first generation of big data advances largely ignored operational data. Most manufacturers have generated and collected operational data for decades — and stored the information in supervisory control and data acquisition systems and plant-based historians. However, making these mission-critical data available to cloud-based databases or BI applications was challenging for several reasons.

The design of protocols for programmable logic controllers and industrial machines often didn’t have the internet in mind, and cybersecurity is a concern for transmitting operational data to the public cloud. Most operational data are time-series data that create inherent challenges for BI applications as these are purpose-built for business data, which most commonly are relational data.

Today, the big data landscape is much different because corporations now want to include operations data in their enterprise digital strategies. The emergence of industrial data standards like OPC and machine-data messaging protocols like message queuing telemetry transport (MQTT) is enabling improved connectivity to new cloud-based databases that are purpose-built for time-series and IIoT data. Additionally, advanced analytics now can be deployed in the cloud, providing SMEs with easy access to operational data whether they are working in the plant, in the field, in a remote operations center or from a home office.

The cloud accelerates these enterprise roll-outs by enabling analysis of massive amounts of data at ever faster speeds, with almost limitless computational power at hand. A corporation can take an analysis created by a single engineer at one plant for one asset or one batch and quickly share this insight across the peer group to scale out to thousands of assets and batches. Specifically, the cloud is well suited for applying machine learning to generate new insights and predictions that simply aren’t possible when data are siloed and users are limited to the constrained computing resources in their company’s data centers.

ALLISON BUENEMANN is a senior analytics engineer with Seeq Corp., Seattle.

MEGAN BUNTAIN is the director of cloud partnerships at Seeq.