Merck Writes Prescription For Digital Transformation

Digital transformation offers the potential to provide manufacturing companies with a path to future success. But first, those companies must establish both a digital-transformation-ready culture and specific strategies to align these initiatives with business goals grounded in operational realities.

In a recent presentation, Eugene Tung, executive director, manufacturing IT and digital transformation co-leader at the $7 billion Vaccine Business at Merck & Co., described how and why Merck is investing in digital transformation. The company’s executive team, which is committed to digital transformation, expects to achieve as much as $1 billion in overall savings, with $500 million of that coming from manufacturing. The four areas initially identified for digital transformation are:

• Product data management

• Predictive condition monitoring

• Technology-enabled laboratories

• Open standards

Product Data Management

Merck is in a fortunate position in that sales of the company’s top three products are constrained only by supply. If the company could make more of these products, it could sell more. This puts a lot of pressure on the manufacturing department to run “all out” and not drop lots or discard any material. “To help our manufacturing department and facilities run in that manner, we started this project focused on product data management,” Tung explained. The initiative includes integrating all data sources into the manufacturing data lake and implementing manufacturing analytics. The goal is to enable process engineers and data scientists to gain data-driven insights into the root causes of manufacturing problems and optimize processes to increase both yields and the potency of the company’s vaccines.

The shop floor data sources include the data historian, continuous historian, batch historian, laboratory information management systems (LIMS), and manufacturing execution system (MES). Inputs also include raw material and supply data. These data are largely siloed today, but ultimately they will all feed into a common analytics platform. Trends and advanced analytics will be viewable on dashboards, and a self-service export capability will let process engineers and data scientists alike put the data in the format their jobs require.
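As a minimal sketch of what feeding siloed sources into a common platform can mean at the record level, the Python below merges extracts from three of those systems into one record per batch. It assumes every source carries a shared batch identifier. The field names, values, and the `merge_by_batch` helper are hypothetical and do not describe Merck’s schema or tooling.

```python
from collections import defaultdict

# Hypothetical extracts from three siloed systems; all field names are illustrative.
historian_rows = [
    {"batch_id": "B001", "avg_temp_c": 36.9},
    {"batch_id": "B002", "avg_temp_c": 37.2},
]
lims_rows = [
    {"batch_id": "B001", "potency": 98.5},
    {"batch_id": "B002", "potency": 95.1},
]
mes_rows = [
    {"batch_id": "B001", "site": "Site A", "yield_pct": 91.0},
    {"batch_id": "B002", "site": "Site B", "yield_pct": 87.5},
]


def merge_by_batch(*sources):
    """Combine (source_name, rows) pairs into one record per batch_id.

    Assumes every row has a "batch_id"; if two sources share a field name,
    the later source wins.
    """
    merged = defaultdict(dict)
    for source_name, rows in sources:
        for row in rows:
            record = merged[row["batch_id"]]
            record.update(row)
            # Remember which systems contributed to this batch record.
            record.setdefault("sources", []).append(source_name)
    return dict(merged)


lake = merge_by_batch(
    ("historian", historian_rows),
    ("lims", lims_rows),
    ("mes", mes_rows),
)
print(lake["B002"]["potency"], lake["B002"]["yield_pct"])
```

A real data lake would need explicit rules for the join key and for conflicting fields; this sketch simply lets the last source win.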

Figure 1 Moving currently siloed data into a data lake will help transform Merck’s employees into digitally enabled knowledge workers

Why Product Data Management Is So Important

Today, like many other companies, Merck relies heavily on Excel spreadsheets to organize and analyze its data. Exporting data from the historian into these spreadsheets makes it difficult and slow for process scientists to get the insights they need, and much of the data is still gathered manually, a tedious process. Furthermore, because the electronic data is stored (and siloed) in different systems, it is difficult for engineers to retrieve and correlate the right information. The team wants to be able to review measurements across multiple batches to compare performance both internally and externally: across sites, across labs, and among contract manufacturing organizations.
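Once batch records share a common structure, that kind of cross-site comparison reduces to grouping and summarizing. The sketch below assumes each batch record carries a `site` label (which could equally name a contract manufacturer or a lab) and a numeric measurement. The data and the `summarize_by` helper are hypothetical.

```python
from collections import defaultdict
from statistics import mean, stdev

# Hypothetical batch-level results; "site" could also be a CMO or a lab.
batches = [
    {"batch_id": "B001", "site": "Internal Site A", "yield_pct": 91.0},
    {"batch_id": "B002", "site": "Internal Site A", "yield_pct": 89.0},
    {"batch_id": "B003", "site": "CMO 1", "yield_pct": 84.0},
    {"batch_id": "B004", "site": "CMO 1", "yield_pct": 86.0},
    {"batch_id": "B005", "site": "CMO 1", "yield_pct": 85.0},
]


def summarize_by(rows, group_key, value_key):
    """Count, mean, and sample standard deviation of value_key per group."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[group_key]].append(row[value_key])
    return {
        name: {
            "n": len(values),
            "mean": mean(values),
            "stdev": stdev(values) if len(values) > 1 else 0.0,
        }
        for name, values in groups.items()
    }


summary = summarize_by(batches, "site", "yield_pct")
for site, stats in summary.items():
    print(site, stats)
```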

To overcome these hurdles, the company has begun to put data into data lakes for analysis, starting with the data from its highest-revenue-producing products. In the future, Merck will store all its data in a data lake. The company is making the data lake product-centric, but not focused on any one product since it wants to be able to add new products easily. Having the data in a data lake will:

• Help reduce “touch-time” and lead time through automation

• Support tools for troubleshooting and investigations

• Enable a better understanding of performance from samples using analytics

• Provide knowledge that can be applied to the process and used to optimize methods

The company wants to include both internal data and external data from suppliers and partners in its data lake. It is working out agreements to respect intellectual property that impacts the process.

Predictive Condition Monitoring

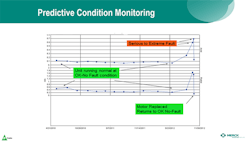

Merck’s predictive condition monitoring program has been in place for eight years. The program uses condition monitoring technology so that equipment can be fixed before it fails catastrophically. The company has a lot of rotating equipment, including pumps, motors, and compressors. To maximize equipment uptime, Merck installs vibration sensors on the bearing mounts, collects this time-series data and, using analytics, examines it for the peaks and frequencies associated with specific failure modes of rotating equipment. This enables the company to identify impending issues and predict when a compressor or other rotating machine is likely to experience trouble.
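The core signal-processing step, turning a vibration time series into a spectrum and picking out its dominant peaks, can be sketched in a few lines of Python. This minimal illustration uses a naive discrete Fourier transform for readability; production systems would use an FFT along with windowing and averaging. The sample rate, the synthetic signal, and the 87 Hz “defect” tone are assumptions, not Merck data.

```python
import math


def amplitude_spectrum(samples, sample_rate_hz):
    """Single-sided amplitude spectrum via a naive DFT: list of (freq_hz, amplitude)."""
    n = len(samples)
    spectrum = []
    for k in range(n // 2 + 1):
        re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(samples))
        im = -sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(samples))
        # Scale so a pure sine of amplitude A appears as roughly A
        # (DC and Nyquist bins are not rescaled correctly, which is fine here).
        spectrum.append((k * sample_rate_hz / n, 2 * math.hypot(re, im) / n))
    return spectrum


def top_peaks(spectrum, count=2, min_hz=1.0):
    """Return the `count` largest bins at or above `min_hz` (skips the DC bin)."""
    candidates = [bin_ for bin_ in spectrum if bin_[0] >= min_hz]
    return sorted(candidates, key=lambda bin_: bin_[1], reverse=True)[:count]


RATE_HZ = 256  # assumed sample rate; 256 samples = 1 s window = 1 Hz bins
signal = [
    1.0 * math.sin(2 * math.pi * 30 * i / RATE_HZ)    # 1x running speed (1800 rpm)
    + 0.4 * math.sin(2 * math.pi * 87 * i / RATE_HZ)  # hypothetical defect tone
    for i in range(RATE_HZ)
]

peaks = top_peaks(amplitude_spectrum(signal, RATE_HZ))
for freq, amp in peaks:
    print(f"{freq:.0f} Hz: {amp:.2f}")
```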

Common failure modes, such as bad bearings and misalignment, show up in characteristic frequency domains. According to Tung, the engineer can identify apparent failure modes from the peaks at certain frequencies. When a serious condition is detected, it is escalated to an alert level. From there, the company has a closed-loop process in which its reliability engineers monitor the trends. When the trend indicates a moderate-to-severe probability of failure, the engineers plan the downtime needed to take the machine offline, correct the problem, and fix the bearings to avoid catastrophic failure.
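The closed-loop logic of alert levels plus a trend that tells engineers when to plan downtime can be illustrated with a simple linear trend. This sketch assumes one vibration amplitude per reading and uses hypothetical alert and danger thresholds. A straight-line fit is only one possible trending method and is not a description of Merck’s models.

```python
ALERT_LEVEL = 4.5   # hypothetical thresholds, e.g. vibration velocity in mm/s
DANGER_LEVEL = 7.1


def alert_level(amplitude):
    """Map one reading to a coarse severity level."""
    if amplitude >= DANGER_LEVEL:
        return "danger"
    if amplitude >= ALERT_LEVEL:
        return "alert"
    return "normal"


def fit_line(xs, ys):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def days_until(days, amplitudes, threshold=DANGER_LEVEL):
    """Extrapolate the trend to estimate days from the last reading to `threshold`.

    Returns None when the trend is flat or improving.
    """
    slope, intercept = fit_line(days, amplitudes)
    if slope <= 0:
        return None
    crossing_day = (threshold - intercept) / slope
    return max(0.0, crossing_day - days[-1])


days = [0, 7, 14, 21, 28]                 # weekly readings (hypothetical)
amplitudes = [2.0, 2.35, 2.7, 3.05, 3.4]  # a slowly worsening bearing
print(alert_level(amplitudes[-1]), round(days_until(days, amplitudes)))
```

A rising trend that is still well below the danger threshold is what gives reliability engineers the lead time to schedule a repair instead of reacting to a failure.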

Correlating the advanced analytics to the process and equipment behavior requires significant domain knowledge. This is particularly true for understanding which frequencies are associated with different failure modes. According to Tung, machine learning algorithms alone are not enough.
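Part of that domain knowledge can be written down directly. Rolling-element bearings have well-known kinematic defect frequencies that depend on shaft speed and bearing geometry, and vibration analysts commonly associate strong components at 1× and 2× running speed with imbalance and misalignment, respectively. The sketch below uses the standard textbook formulas; the bearing dimensions, the ±1 Hz matching tolerance, and the helper names are hypothetical, and real diagnosis also weighs harmonics, sidebands, and amplitude trends.

```python
import math


def bearing_defect_frequencies(shaft_hz, n_elements, ball_d, pitch_d, contact_deg=0.0):
    """Classic kinematic defect frequencies (Hz) for a rolling-element bearing."""
    r = (ball_d / pitch_d) * math.cos(math.radians(contact_deg))
    return {
        "FTF (cage)": shaft_hz / 2 * (1 - r),
        "BPFO (outer race)": n_elements * shaft_hz / 2 * (1 - r),
        "BPFI (inner race)": n_elements * shaft_hz / 2 * (1 + r),
        "BSF (rolling element)": pitch_d * shaft_hz / (2 * ball_d) * (1 - r ** 2),
    }


def label_peak(peak_hz, shaft_hz, defect_freqs, tol_hz=1.0):
    """List candidate failure modes whose frequency lies within tol_hz of a peak."""
    candidates = {
        "1x running speed (e.g. imbalance)": shaft_hz,
        "2x running speed (e.g. misalignment)": 2 * shaft_hz,
        **defect_freqs,
    }
    return [name for name, f in candidates.items() if abs(peak_hz - f) <= tol_hz]


# Hypothetical bearing: 9 balls, 10 mm balls on a 50 mm pitch circle, 30 Hz shaft.
freqs = bearing_defect_frequencies(shaft_hz=30.0, n_elements=9, ball_d=10.0, pitch_d=50.0)
print(label_peak(108.2, 30.0, freqs))  # close to BPFO: outer-race defect candidate
```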

In conjunction with appropriate signal processing and the domain knowledge of Merck’s experts, the machine learning algorithm predicts when a failure might occur. Tung notes that the company’s analytics platform can also correlate this to yield improvements. Merck also uses machine learning and correlative events to develop information models.

Figure 2 Predictive condition monitoring involves a combination of vibration sensors, frequency analysis, advanced analytics, and domain knowledge

Steam Trap Monitoring

Steam traps help protect costly equipment such as steam turbines by discharging condensate and non-condensable gases from the steam system. However, steam traps themselves fail frequently, and a failed trap can waste a large amount of energy. To catch these failures, Merck has installed wireless, IoT-connected sensors on steam traps located in geographically dispersed pipe racks. The company’s domain experts use the trended data from these sensors to determine whether the steam traps are failing or have already failed.
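How trended sensor data might be turned into a trap status can be sketched as a small rule-based classifier. The sketch assumes each wireless sensor reports an inlet temperature and an acoustic (ultrasonic) level. The thresholds, the 180 °C saturation temperature, and the three-reading persistence rule are hypothetical; commercial steam-trap monitors use their own vendor-specific methods.

```python
from dataclasses import dataclass


@dataclass
class TrapReading:
    inlet_temp_c: float
    acoustic_level: float  # hypothetical 0-100 scale from an ultrasonic sensor


def classify_reading(reading, saturation_temp_c, cold_margin_c=30.0, leak_level=60.0):
    """Classify one reading with simple, hypothetical rules."""
    if reading.inlet_temp_c < saturation_temp_c - cold_margin_c:
        # Inlet far below steam temperature: condensate may be backing up.
        return "cold (possibly failed closed)"
    if reading.acoustic_level >= leak_level:
        # Hot trap with continuous flow noise: live steam may be passing through.
        return "blowing through (possibly failed open)"
    return "ok"


def trap_status(readings, saturation_temp_c, persistence=3):
    """Use the trend: report a state only if the last `persistence` readings agree."""
    recent = [classify_reading(r, saturation_temp_c) for r in readings[-persistence:]]
    if len(recent) < persistence or len(set(recent)) != 1:
        return "inconclusive"
    return recent[0]


history = [TrapReading(178.0, 20.0), TrapReading(177.5, 72.0),
           TrapReading(178.2, 75.0), TrapReading(178.1, 74.0)]
print(trap_status(history, saturation_temp_c=180.0))
```

Requiring several agreeing readings is one simple way to use the trend rather than a single sample, so that a momentary spike does not trigger a maintenance call.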

Technology-enabled Laboratory

Another major initiative at Merck is to use digitalization to improve the performance of its laboratories. Laboratory results help pharmaceutical manufacturers produce quality products. A recent Merck pilot used MES technologies in the laboratory to replace paper-based work instructions with electronic work instructions. The company is also introducing finite scheduling, planning, and resource planning into its labs. Other digital initiatives include electronic specification management, chemical inventory management, and calibration management.
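Finite scheduling differs from simple planning in that it respects the limited number of instruments actually available. The minimal sketch below assumes identical instruments of each type and uses the earliest-due-date dispatching rule, which is one common heuristic and not necessarily what Merck’s scheduling tool does. The tests, durations, and due times are hypothetical.

```python
import heapq
from dataclasses import dataclass


@dataclass
class LabTest:
    name: str
    instrument: str   # instrument type the test needs
    duration_h: float
    due_h: float      # hours from now


def finite_schedule(tests, capacity):
    """Assign tests to a finite number of instruments per type.

    capacity maps instrument type -> number of identical units.
    Tests are dispatched in earliest-due-date order to whichever unit frees up first.
    """
    # One min-heap of "free at" times per instrument type; a list of
    # equal values is already a valid heap.
    free_at = {kind: [0.0] * units for kind, units in capacity.items()}
    plan = {}
    for test in sorted(tests, key=lambda t: t.due_h):
        start = heapq.heappop(free_at[test.instrument])
        end = start + test.duration_h
        heapq.heappush(free_at[test.instrument], end)
        plan[test.name] = {"start": start, "end": end, "on_time": end <= test.due_h}
    return plan


tests = [
    LabTest("potency", "plate reader", 4.0, 8.0),
    LabTest("purity", "HPLC", 3.0, 4.5),
    LabTest("impurities", "HPLC", 2.0, 4.0),
    LabTest("identity", "HPLC", 1.0, 10.0),
]
plan = finite_schedule(tests, {"HPLC": 1, "plate reader": 1})
for name, slot in plan.items():
    print(name, slot)
```

With only one HPLC, the purity test finishes after its due time. Surfacing that conflict before the work starts, rather than after a deadline is missed, is the point of finite scheduling.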

The company has other laboratory projects based on digital integrated training and lab analytics. To ensure that biopharmaceutical products are stable, Merck relies on lab assays to release them. However, manufacturing process data and lab assay data can drift for several reasons. By obtaining more data from its lab assays and feeding it into the analytics platform, the company can determine whether laboratory issues or manufacturing issues are causing the drift and the resulting out-of-spec products.
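One way to separate laboratory drift from manufacturing drift is sketched below. It assumes the lab runs a reference standard or control sample alongside product samples in each assay run. If the control drifts, the assay is suspect; if the control is stable but product results move, the process is the more likely cause. This is a hypothetical illustration of that reasoning using a simple mean-shift check, not Merck’s analytics.

```python
import math
from statistics import mean, stdev


def has_shifted(values, baseline_n=5, recent_n=5, k=3.0):
    """Flag a shift when the recent mean moves more than k standard errors
    (estimated from the baseline window) away from the baseline mean."""
    baseline, recent = values[:baseline_n], values[-recent_n:]
    standard_error = stdev(baseline) / math.sqrt(recent_n)
    return abs(mean(recent) - mean(baseline)) > k * standard_error


def attribute_drift(control_results, product_results):
    """Hypothetical attribution rule based on a control sample run with each assay."""
    if has_shifted(control_results):
        return "laboratory: the assay control itself is drifting"
    if has_shifted(product_results):
        return "manufacturing: control is stable but product results are drifting"
    return "no drift detected"


control = [100.0, 100.4, 99.6, 100.2, 99.8, 100.1, 99.9, 100.3, 99.7, 100.0]
product = [95.0, 95.4, 94.6, 95.2, 94.8, 93.0, 92.8, 93.2, 92.9, 93.1]
print(attribute_drift(control, product))
```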

“Stealth Digital Strategies” and Open Standards

In addition to its process-oriented digital transformation elements, Merck is also working on what Tung referred to as “stealth digital strategies,” namely open standards. These efforts include working with standards organizations to simplify integration with the company’s manufacturing and laboratory activities. A strong proponent of standards, Merck believes that deploying, engaging with, and adopting more standards will drive productivity throughout the organization.

The company participates in many different standards organizations. Merck is a founding member of the Open Process Automation Forum (OPAF), which focuses on standard protocols. The company is also a founding member of the Allotrope Foundation, which is similar to OPAF but focuses on laboratory information. It also participates in many industry groups that develop standards, including BioPhorum, PDA, and ISPE.

BioPhorum is collaborating with NAMUR in Germany to develop standard interfaces between standard data communication protocols and models. The collaboration includes end users, system integrators (SIs), and equipment suppliers, all working together to develop a standard interface between intelligent equipment, unit operation skids, and different automation systems to simplify information exchange.

Merck is working with BioPhorum to establish interoperability using standard interfaces to process skids or unit operations. This includes developing standardized interfaces for three common unit operations: a bioreactor, a filtration skid, and a chromatography column, each of which is sold with control systems from different suppliers. In the past, the company wrote custom interfaces, which was time-consuming and often caused problems after upgrades. The goal is to come together as an industry with equipment suppliers and other pharmaceutical manufacturers to establish a common communication protocol, using OPC UA and data information models, that gets information to the analytics platform for better and faster process insights.
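The idea behind a standard interface is that every supervisory or analytics system sees the same information model, whichever supplier built the skid. The Python sketch below illustrates only that idea: a hypothetical common bioreactor model and two hypothetical vendor tag maps that translate into it. It does not use OPC UA or any real companion specification; in the approach described here, such a model would be exposed through OPC UA, which this sketch does not attempt to show.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BioreactorState:
    """Hypothetical vendor-neutral information model for a bioreactor skid."""
    temperature_c: float
    ph: float
    dissolved_oxygen_pct: float
    agitation_rpm: float


# Hypothetical native tag names two different suppliers might use for the same values.
VENDOR_TAG_MAPS = {
    "vendor_a": {"TT101.PV": "temperature_c", "AT201.PV": "ph",
                 "AT301.PV": "dissolved_oxygen_pct", "SC401.PV": "agitation_rpm"},
    "vendor_b": {"Vessel_Temp": "temperature_c", "pH_Value": "ph",
                 "DO_Percent": "dissolved_oxygen_pct", "Stirrer_Speed": "agitation_rpm"},
}


def to_common_model(vendor, native_tags):
    """Translate one vendor's native tag values into the shared model."""
    mapping = VENDOR_TAG_MAPS[vendor]
    return BioreactorState(**{field: float(native_tags[tag]) for tag, field in mapping.items()})


a = to_common_model("vendor_a", {"TT101.PV": 37.0, "AT201.PV": 7.1,
                                 "AT301.PV": 40.0, "SC401.PV": 120})
b = to_common_model("vendor_b", {"Vessel_Temp": 37.0, "pH_Value": 7.1,
                                 "DO_Percent": 40.0, "Stirrer_Speed": 120})
print(a == b)  # consumers cannot tell which supplier produced the data
```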

The team’s vision is to run a batch from the standard supervisory system with phases executed at the unit operations level. The initial project was to get the bioreactor, filtration skid, and chromatography column to interoperate with the DCS, which could then be used to initiate phases.
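Running a batch from a supervisory system while phases execute on the skids implies a shared phase state model, which in batch control is commonly based on ISA-88. The sketch below uses a heavily simplified, hypothetical subset of those states (it omits transient states such as Holding and Restarting) to show the supervisory layer driving three unit-operation phases in sequence. The phase names are illustrative.

```python
# Simplified, hypothetical subset of ISA-88-style phase states.
# "start", "hold", "restart", "abort", "reset" are supervisory commands;
# "done" stands for the skid reporting that its phase logic has finished.
TRANSITIONS = {
    ("Idle", "start"): "Running",
    ("Running", "hold"): "Held",
    ("Held", "restart"): "Running",
    ("Running", "done"): "Complete",
    ("Running", "abort"): "Aborted",
    ("Held", "abort"): "Aborted",
    ("Complete", "reset"): "Idle",
    ("Aborted", "reset"): "Idle",
}


class Phase:
    def __init__(self, name):
        self.name = name
        self.state = "Idle"

    def handle(self, event):
        """Apply a command or event, rejecting transitions the model does not allow."""
        next_state = TRANSITIONS.get((self.state, event))
        if next_state is None:
            raise ValueError(f"{self.name}: '{event}' not allowed in state {self.state}")
        self.state = next_state
        return next_state


def run_batch(phases):
    """Supervisory side: start each unit-operation phase in order."""
    log = []
    for phase in phases:
        phase.handle("start")
        # Assume the skid finishes at once; a real system would wait for its report.
        phase.handle("done")
        log.append((phase.name, phase.state))
    return log


phases = [Phase("bioreactor: production"), Phase("filtration: clarification"),
          Phase("chromatography: capture")]
log = run_batch(phases)
print(log)
```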

Promoting Adoption of New Interfaces

To promote adoption of the new interface, Merck held a three-day workshop with its DCS and equipment suppliers. These included DCS suppliers Emerson, Siemens, and Rockwell Automation; and equipment suppliers Pall, Millipore, and Sartorius. The group worked on proofs of concept for the standard interface to develop the information model for a bioreactor. Ultimately, nine different interfaces were developed encompassing all possible connection permutations between the different DCSs and between the different equipment and DCSs.

As a result, the workshop proved out the concept of using standard interfaces based on open standards to replace custom interfaces, each of which could take eight to nine weeks of engineering time to develop. According to Tung, “This is an excellent example of how standards are driving productivity.” After the bioreactor unit operation is completed, the next unit operations targeted for standard interfaces will be normal flow filtration and chromatography.
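The productivity argument can be made concrete with the article’s own numbers. Assuming the nine interfaces correspond to each of the three DCSs paired with each of the three equipment suppliers, building all nine as custom interfaces at eight to nine weeks apiece would cost 72 to 81 engineering weeks. With a shared standard, each supplier implements the common interface once, so the implementation count grows as N + M rather than N × M. That framing is a general argument, not a figure reported from the workshop.

```python
from itertools import product

dcs_suppliers = ["Emerson", "Siemens", "Rockwell Automation"]
equipment_suppliers = ["Pall", "Millipore", "Sartorius"]
weeks_per_custom_interface = (8, 9)  # range quoted in the article

# Assumption: one point-to-point interface per DCS/equipment pairing.
pairings = list(product(dcs_suppliers, equipment_suppliers))
custom_weeks = tuple(len(pairings) * w for w in weeks_per_custom_interface)

# With a standard interface, each supplier implements it once (N + M, not N x M).
standard_implementations = len(dcs_suppliers) + len(equipment_suppliers)

print(len(pairings), custom_weeks, standard_implementations)
```

The gap widens as suppliers are added: with five DCSs and five skid vendors, the same logic gives 25 pairings versus 10 implementations.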

JANICE ABEL is a principal consultant for ARC Advisory Group, Dedham, Mass. Email her at [email protected].