Delve Deeper into “Premature Failures” of Rupture Discs

Today’s processing plants generally include complex networks of sensors, monitors and computer processors. We’ve learned to believe what our monitors tell us and to rely on the data they report. Usually we should — but in some instances the data can mislead us. Attributing the bursting of a rupture disc to “premature failure” exemplifies this. In most cases, the disc actually has operated as intended to relieve an overpressure event but the available data aren’t good enough for us to see that such an event occurred.

This underscores the importance of understanding the limits of what our sensors can tell us, and what we can do to protect ourselves from the things they can’t. Our networks are powerful but bad things still happen to good systems. We still get caught unawares by overloads and can’t open a valve fast enough or disable a feed line in time to prevent damage. How can we avoid such issues?


Measurement Basics

The ability of a system to react properly depends upon having adequate and appropriate data available. Sensors provide these data to control systems. So, let’s quickly review some key facts about the sensor, sampling and resolution, and data reporting.

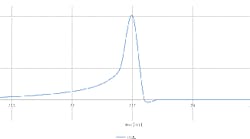

Figure 1. Data show that the pressure spike reached about 16 psig.

The sensor. This is simply a device that responds in a predictable way to some phenomenon. Various factors affect its performance. For instance, a sensor requires a certain minimum time to get a reading, which limits its speed of response; a typical thermocouple, for example, may take several seconds to respond to a change in temperature. Changes occurring faster than the sensor can respond produce skewed results, effectively an average rather than the true values.
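A minimal sketch of why a slow sensor reports something closer to an average than the true value. It assumes the sensor behaves as a simple first-order lag with time constant `tau` (a common idealization, not a model of any particular device) and feeds it a hypothetical 0.5 s pulse of 10 psi. The function name and all numbers are illustrative assumptions.

```python
import math

def first_order_sensor(signal, dt, tau):
    """Idealized first-order lag sensor, dy/dt = (x - y) / tau.

    Uses the exact discrete update for an input held constant over each
    step of length dt. Returns the sensor reading after every step.
    """
    alpha = 1.0 - math.exp(-dt / tau)
    reading = signal[0]
    readings = []
    for x in signal:
        reading += (x - reading) * alpha
        readings.append(reading)
    return readings

DT = 0.01  # simulation step, s
# Hypothetical true pressure: 0 psi, with a 10 psi pulse from t = 1.0 s to t = 1.5 s.
true_pressure = [10.0 if 100 <= k < 150 else 0.0 for k in range(300)]

fast = first_order_sensor(true_pressure, DT, tau=0.05)  # assumed fast transducer
slow = first_order_sensor(true_pressure, DT, tau=2.0)   # assumed slow, thermocouple-like lag

print(f"true peak {max(true_pressure):.2f}, fast sensor {max(fast):.2f}, "
      f"slow sensor {max(slow):.2f}")
```

With these assumed values the slow sensor peaks at about 2.2 psi, roughly a fifth of the true 10 psi pulse, because the pulse ends long before the reading can catch up.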

Another limiting factor of many sensors is accuracy, i.e., the closeness of the reported value to the true one. The sensor’s construction and calibration can affect its accuracy. Construction usually sets sensing range and repeatability. For example, consider a pressure sensor that has a membrane whose deflection is measured to indicate pressure. A very thin membrane will be quite responsive to small changes in pressure, deflecting a large amount (typically in a less-than-linear fashion). This can improve accuracy but restricts the upper pressure the sensor can handle. On the other hand, a thick membrane might deflect very little over a relatively large pressure range, creating an accuracy issue when the differences in pressure are small.

So, pay attention to the sensor’s accuracy specification if one is provided, and note whether it is stated as a percentage of the full-scale range or of the reading. (To get a sense of the impact of the different bases, see: “Pressure Gauges: Deflate Random Errors.”)
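To make the difference between the two bases concrete, here is a small worked calculation for a hypothetical 0 to 100 psig gauge with a ±1% accuracy specification. Both numbers, and the helper names, are assumptions for illustration only.

```python
def error_full_scale(span, pct):
    """Absolute error bound (psi) when accuracy is stated as % of full scale."""
    return span * pct / 100.0

def error_of_reading(reading, pct):
    """Absolute error bound (psi) when accuracy is stated as % of reading."""
    return abs(reading) * pct / 100.0

SPAN_PSI = 100.0  # hypothetical 0-100 psig gauge
PCT = 1.0         # hypothetical +/-1 % accuracy specification

for reading in (5.0, 50.0, 95.0):
    fs = error_full_scale(SPAN_PSI, PCT)
    rd = error_of_reading(reading, PCT)
    print(f"{reading:5.1f} psig: +/-{fs:.2f} psi (% of full scale), "
          f"+/-{rd:.2f} psi (% of reading)")
```

At 5 psig the full-scale basis allows ±1 psi, i.e., 20% of the reading itself, while the reading basis allows only ±0.05 psi. Near the top of the range the two bases converge.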

Make no mistake, a sensor will report some value. However, it’s important to understand exactly what that value means in the context of your system. In the end, the output of most sensors is a signal already laden with caveats as to what its amplitude really reveals.

Sampling and resolution. Something must sample the signal generated by the sensor and record the data values. Sampling involves two parameters: rate and resolution. Sampling rate is simply the number of times a signal is read and recorded over a certain interval. Resolution indicates the smallest increment that you should treat as meaningful. So, for instance, for a pressure gauge with a resolution of 2 psi, don’t worry about differences smaller than that. Most systems will round the value to the nearest meaningful increment to prevent “false interpretations.”
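The two sampling parameters can be sketched in a few lines. The assumptions here are a hypothetical pressure that oscillates ±0.8 psi around 10 psig once per second, read 4 times per second and rounded to a 2 psi resolution; `sample` and `quantize` are illustrative helpers, not functions from any particular data-acquisition package.

```python
import math

def sample(signal_fn, rate_hz, duration_s):
    """Read signal_fn(t) at a fixed rate; returns a list of (t, value) pairs."""
    n = int(duration_s * rate_hz)
    return [(k / rate_hz, signal_fn(k / rate_hz)) for k in range(n)]

def quantize(value, resolution):
    """Round a reading to the nearest multiple of the resolution."""
    return round(value / resolution) * resolution

def pressure(t):
    """Hypothetical pressure: 10 psig with a +/-0.8 psi, 1 Hz oscillation."""
    return 10.0 + 0.8 * math.sin(2 * math.pi * t)

readings = sample(pressure, rate_hz=4, duration_s=1.0)
reported = [quantize(p, resolution=2.0) for _, p in readings]
print(reported)                                   # every reading rounds to 10 psig
print(quantize(10.9, 2.0), quantize(11.1, 2.0))   # a 0.2 psi change flips the report by 2 psi
```

The reported trace is flat even though the pressure is moving, and a tiny change near a rounding boundary shows up as a full 2 psi step; both are expected behavior at this resolution, not real process changes.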

As discussed above, the sensor itself constrains the sampling rate. However, the device doing the sampling, such as an analog-to-digital converter, also imposes constraints, both through its own hardware limits and through the needs of downstream devices such as controllers or recorders.

Data reporting. Once data are sampled, the reporting signal itself may exhibit some cyclic characteristics. In addition, you must consider the way in which the data are compiled, graphed and analyzed. For instance, let’s compare daily weather temperature cycles to vehicle motor rpm. The daily temperature cycle obviously is slow, so a sample rate of only once per hour suffices for relatively detailed data and results. In contrast, a small car engine may run at thousands of rpm, requiring a much higher sample rate for ample data coverage. In cases like this, systems typically sample in the 1–2 times/sec range and then may average the readings before reporting. So, adequately monitoring the engine involves analyzing a massively greater volume of data. Moreover, interpreting these data requires properly accounting for significant interactions that can impact engine rpm; small changes in conditions can affect the reported values drastically if sample rates aren’t taken into account. Various industries use different sampling and reporting strategies to manage this.
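The difference in data volume is easy to quantify. The sketch below simply uses the two sample rates mentioned above (once per hour for weather, twice per second for the engine) and counts a single channel; the helper name is illustrative.

```python
SECONDS_PER_DAY = 24 * 60 * 60

def samples_per_day(sample_period_s):
    """Number of readings recorded per day for one channel."""
    return SECONDS_PER_DAY / sample_period_s

weather = samples_per_day(3600.0)  # once per hour
engine = samples_per_day(0.5)      # twice per second

print(f"weather: {weather:,.0f} samples/day")   # 24
print(f"engine:  {engine:,.0f} samples/day")    # 172,800
print(f"ratio:   {engine / weather:,.0f}x")     # 7,200x
```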

Figure 2. This plot indicates a much less severe pressure spike.

Signal processing really comes down to how many points we get, how accurate they are and what we do with the space in between them. The way data are reported can have important consequences. Let’s illustrate this with three graphs of an event — first the true values, then the averages, and finally the maximum values.

Figure 1 shows the true pressure values for a system measured over a period of time. In this case, the data follow a mathematical function that rises quickly over the span of a second. The graph plots ten data points per second.

Frequently, however, the data are processed using averaging or maximum-value schemes.

Figure 2 results from averaging the same ten data points per second. Averaging often is used to filter out electrical noise. When confronted with a signal spike (high intensity, short duration), however, averaging smooths the peak magnitude out of the graph, so values are under-reported. The shorter the spike relative to the averaging interval, and the lower the sample rate, the larger the error, a reminder of the importance of sampling rate. The pressure spike shown in the true-value plot reaches about 16 psig but the high value is only about 7 psig once averaged. Averaging is quite common, so keep that in mind when analyzing data yielded by some unusual system event.
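The averaging effect can be reproduced with a few hypothetical numbers. The sketch below assumes 10 samples per second, a 2 psig baseline and a spike lasting three samples that peaks at 16 psig, and it reports one mean per second, as a typical averaging recorder might. The data are invented for illustration and are not the data behind Figure 2.

```python
def block_average(samples, n):
    """Report one value per block of n consecutive samples: the block mean."""
    return [sum(samples[i:i + n]) / n for i in range(0, len(samples) - n + 1, n)]

SAMPLES_PER_S = 10
baseline = [2.0] * SAMPLES_PER_S
spike_second = [2.0, 2.0, 2.0, 2.0, 8.0, 16.0, 8.0, 2.0, 2.0, 2.0]
true_psig = baseline + spike_second + baseline  # 3 s of hypothetical data

reported = block_average(true_psig, SAMPLES_PER_S)
print(max(true_psig), reported)  # 16.0 [2.0, 4.6, 2.0]
```

A spike lasting a single sample instead of three would average to only 3.4 psig, which is the duration effect described above.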

Figure 3 uses the same data but plots the maximum values. This approach can be useful for capturing events that occur over a short period of time. However, it may work poorly for controlling a process. The approach will retain all electrical noise and likely will over-report the values in the system, leading to conservative responses to all events. While this may offer more assurance of avoiding catastrophe, it also may prompt unnecessary and costly shutdowns, slowdowns, etc. The maximum-value retention scheme is more common in testing and laboratory setups.
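A matching sketch of a maximum-value (peak-hold) scheme, assuming a steady 10 psig process with a small, hypothetical ±0.5 psi noise pattern repeated every second. The peak-hold output reports the top of the noise band every second, while the mean stays at the true 10 psig.

```python
def block_max(samples, n):
    """Report one value per block of n consecutive samples: the block maximum."""
    return [max(samples[i:i + n]) for i in range(0, len(samples) - n + 1, n)]

SAMPLES_PER_S = 10
# Hypothetical electrical noise pattern (psi); it sums to zero over one second.
noise = [0.1, -0.3, 0.5, -0.2, 0.0, 0.2, -0.5, 0.3, -0.1, 0.0]
measured = [10.0 + e for e in noise] * 3  # 3 s of a steady 10 psig process

peak_hold = block_max(measured, SAMPLES_PER_S)
mean = sum(measured) / len(measured)
print(peak_hold, round(mean, 6))  # [10.5, 10.5, 10.5] 10.0
```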

What this means is that when an overload occurs, records can be woefully inadequate for accurately capturing and reporting the overload value or the circumstances that led to it.

Skewed results can mislead us about the severity of a situation, impairing our ability to react appropriately and to prevent future occurrences. This puts lives and assets at greater risk.

Anticipating Abnormal Circumstances

Bear in mind that when design engineers configure most systems to monitor and record processes, they consider only conditions deemed reasonably likely. For instance, take a system that must open a valve to relieve pressure in a tank. Under normal circumstances, the pressure rises at a rate of only a few psi per hour. In such a case, a sampling rate of a few times per minute may suffice. However, under abnormal circumstances, say in the event of a runaway reaction or fire, the pressure may rise much faster.
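How much the pressure can change between two readings is simple arithmetic. The sketch assumes a normal rise of 3 psi per hour, a runaway rise of 5 psi per second and a sample rate of 3 readings per minute; all three numbers are hypothetical.

```python
def rise_between_samples(rise_rate_psi_per_s, samples_per_min):
    """Largest pressure change that can occur between two consecutive samples."""
    interval_s = 60.0 / samples_per_min
    return rise_rate_psi_per_s * interval_s

SAMPLES_PER_MIN = 3  # hypothetical: one reading every 20 s

normal = rise_between_samples(3.0 / 3600.0, SAMPLES_PER_MIN)  # 3 psi/h
runaway = rise_between_samples(5.0, SAMPLES_PER_MIN)          # 5 psi/s

print(f"normal:  {normal:.3f} psi between readings")   # 0.017
print(f"runaway: {runaway:.0f} psi between readings")  # 100
```

Under normal conditions each reading trails reality by a negligible amount; in the assumed runaway case the pressure can climb 100 psi between readings, so the control system may see nothing unusual until the pressure has already changed dramatically.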

Figure 3. This graph can prove useful for analyzing short-duration events.

Such an event might render the normal operating sample rate useless and inaccurate, especially if results are averaged, as discussed above. This potentially can cause a catastrophic failure because the system may not register or report the rise in time to react. Even when the signal is detected, the reported value, if averaged, likely will dramatically underestimate the rise, as we have demonstrated. When reviewed later, the record would indicate a pressure significantly below the rated working limits of the tank.

This type of failure in the measurement system is a consequence of sampling below what is called the Nyquist rate, which is twice the highest frequency present in the signal. Generally speaking, this is the minimum sample rate required to avoid introducing distortion (known as aliasing) into the reported data. If sampling occurs faster than the Nyquist rate, it’s possible in principle to reconstruct the input signal accurately. If the sample rate is below the Nyquist rate, regenerating a true representation of the scenario without distortion is impossible. For a spike event, it can be difficult even to determine the Nyquist rate, because a short, sharp spike contains a broad range of frequencies.
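Aliasing is easy to demonstrate. In this sketch (the sample rate and signal frequency are assumed values), a 9 Hz oscillation sampled at 10 Hz, well below its 18 Hz Nyquist rate, yields exactly the same samples as an inverted 1 Hz oscillation; nothing in the recorded data can tell the two apart.

```python
import math

def alias_frequency(f_signal_hz, f_sample_hz):
    """Apparent frequency of a sinusoid after sampling (folding about multiples of fs)."""
    return abs(f_signal_hz - f_sample_hz * round(f_signal_hz / f_sample_hz))

FS = 10.0      # hypothetical sample rate, Hz
F_TRUE = 9.0   # hypothetical true oscillation, Hz

true_samples = [math.sin(2 * math.pi * F_TRUE * k / FS) for k in range(20)]
alias_samples = [-math.sin(2 * math.pi * 1.0 * k / FS) for k in range(20)]

print(alias_frequency(F_TRUE, FS))  # 1.0: the 9 Hz signal masquerades as 1 Hz
print(alias_frequency(3.0, FS))     # 3.0: below fs / 2, so no aliasing
print(all(math.isclose(a, b, abs_tol=1e-9)
          for a, b in zip(true_samples, alias_samples)))  # True
```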

Increasing the sampling rate could rectify portions of this problem. However, doing so multiplies the amount of data captured and, in many cases, can be expensive. Remember too, these events shouldn’t happen but we are forced to prepare because they could and do. That said, most systems are designed to handle what should occur. The important point to take away is that our systems are limited in what they can witness and you should view their output of data with that in mind.

Complexities of Troubleshooting

Consider the following example: If plugging two waffle irons into an electrical outlet blows a fuse (a safety device), the solution is not to switch to a bigger fuse. That would put the system at risk of an overload that could have catastrophic consequences. When a safety device activates, in our experience it’s useful to assume it worked as intended and then follow that logical assumption to its conclusions. For instance, remember the valve and tank discussed above. If the valve activated, this means, at minimum, that the pressure at the valve reached its set pressure. If review of the pressure data for the tank shows a pressure below the tagged set pressure, the first question is how much the two pressures differ. A difference of a few percentage points indicates a controls problem: something might be activating too slowly, running too close to the safety limits, heating too fast, etc. On the other hand, if the pressures are very different (~20% or more), whatever activated the valve did so quickly. The greater the difference (error), the faster the event must have been (recall the averaging discussion above). Such information alone can be quite illuminating because only a limited number of overpressure scenarios can occur.

This is simply another clue in the mystery of any system event. Remember this analysis assumes the safety device activated properly. This isn’t always the case, though. In some circumstances, a safety device may fail at a value other than intended — usually because an environmental condition compromised the device. Potential causes include cycling, corrosion or simple wear-and-tear from exposure to the elements. Safety devices have their limitations as well, and must be specified properly.
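The rule of thumb for comparing a recorded peak with the set pressure can be written as a small triage function. It assumes, as the analysis does, that the device itself activated at its set pressure. The ~20% threshold comes from the text; the 5% cutoff standing in for "a few percentage points" and the function and label names are hypothetical choices for illustration, not an established standard.

```python
def triage_activation(recorded_peak_psig, set_pressure_psig,
                      small_pct=5.0, large_pct=20.0):
    """Classify a safety-device activation by how far the recorded peak
    fell short of the device's set pressure.

    small_pct is a hypothetical cutoff for 'a few percentage points';
    large_pct follows the ~20 % figure in the text.
    """
    shortfall_pct = (set_pressure_psig - recorded_peak_psig) / set_pressure_psig * 100.0
    if shortfall_pct <= 0.0:
        return "record reached set pressure"
    if shortfall_pct <= small_pct:
        return "controls problem"   # e.g., running too close to the limit
    if shortfall_pct >= large_pct:
        return "fast event"         # recorder likely smoothed a rapid rise
    return "inconclusive"

for peak in (100.0, 97.0, 88.0, 60.0):
    print(peak, triage_activation(peak, set_pressure_psig=100.0))
```

In practice the boundaries depend on the recorder's sample and averaging settings, so treat these cutoffs as placeholders rather than design values.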

As discussed, the first two limitations to any system center around the physical components, the sensors and the signal processing. Next comes the control system. It can get incredibly complex very quickly. Just managing the sequence of expected events can be a daunting task. Let’s look at a relatively simple process like preparing oatmeal in an automatic pressure cooker. The inlet lets the fluid into the system. The system then heats the oatmeal for 20 minutes and finally drains the oatmeal into a bowl. While seemingly simple, consider for a moment all the complexities that arise very quickly. When opening the fill valve, you must make sure the drain is closed and the vent is open; otherwise the oatmeal won’t go into the tank because the air inside has no way out. Then, how do you tell when the tank is full? You may have to add a sensor to measure the level, flow or weight of the tank to determine this. Once the tank is full, you have to close the vent and fill valve before heating it. Do you need to monitor the pressure to control the amount of heat applied? Once cooking is complete, you must open the vent to let off steam before opening the drain. How long do you wait before opening the drain? Once the drain is opened, how do you know when the bowl is full? How long can you leave the oatmeal in the tank?

These are all the events we know are going to happen — in a relatively simple system and process. Still, each requires another sensor, sampler and control to manage it. Now what happens when a valve gets clogged, somebody puts the wrong kind of oatmeal into the fill pipe or the heater doesn’t turn off? These events aren’t expected but potentially can destroy the equipment. We can’t expect a system designer to accommodate every possible scenario!
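The expected sequence in the oatmeal example can be sketched as a set of interlocks, each step refusing to run unless its preconditions hold. All names (`Cooker`, `open_fill`, `InterlockError` and so on) are hypothetical, and the fill sensor is reduced to a boolean. Note what the sketch does not cover: a clogged valve or a heater that fails to switch off would pass straight through it, which is the kind of unexpected event described above.

```python
from dataclasses import dataclass

class InterlockError(RuntimeError):
    """Raised when a step is requested while its preconditions are not met."""

@dataclass
class Cooker:
    """Hypothetical valve and heater states for the oatmeal cooker."""
    fill_open: bool = False
    drain_open: bool = False
    vent_open: bool = False
    heater_on: bool = False
    full: bool = False  # stands in for a level, flow or weight sensor

def require(condition, message):
    if not condition:
        raise InterlockError(message)

def open_fill(c):
    require(not c.drain_open, "drain must be closed before filling")
    require(c.vent_open, "vent must be open so the air can escape")
    c.fill_open = True

def start_heating(c):
    require(c.full, "tank must be full before heating")
    require(not c.fill_open and not c.vent_open, "close fill valve and vent before heating")
    c.heater_on = True

def open_drain(c):
    require(not c.heater_on, "heater must be off before draining")
    require(c.vent_open, "vent the steam before opening the drain")
    c.drain_open = True

# Expected sequence
c = Cooker()
c.vent_open = True
open_fill(c)
c.full = True                      # level sensor reports full
c.fill_open = c.vent_open = False  # close fill valve and vent
start_heating(c)
c.heater_on = False                # after the 20-minute cook
c.vent_open = True                 # let off steam
open_drain(c)
print(c)

# An out-of-order request is refused
try:
    open_fill(Cooker())            # vent still closed
except InterlockError as err:
    print("refused:", err)
```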

The Role of Passive Protection

Typically, we can address the limitations of a control system by installing protection devices that will absorb an unexpected circumstance without destroying the plant. There are numerous examples of protection devices: fuses, safety relief valves, circuit breakers, rupture discs and frangible bulbs, among many others. These usually are physically or mechanically responsive and require no power or intervention to activate. Such devices frequently can be installed with little or no support structure. Additionally, when designed into the system from the outset, they often can simplify control systems and passively protect equipment and personnel. As an example, a relief valve might serve to prevent a tank from going into a vacuum condition. These devices may provide a cost-effective, easier-to-install alternative where adding controls and active devices would be expensive or impossible, for instance when the system is already in place and its original design did not anticipate the overload.

Activation of safety devices should happen relatively rarely. When an activation does occur, it typically leads to inquiries and data dumps to try to find out what happened. Vendors are asked relatively often why a passive device “failed” early. Recall the scenario we’ve already covered where the tank pressure rose at a high rate but the data recorder showed a pressure lower than the actual one. In reality, the device may have activated precisely when it should have. However, the monitoring equipment failed to capture the true pressure, giving the illusion that the device “failed prematurely.” This happens all the time.

In fact, given the scenario above, you can predict that the data always will appear as though a device broke early. After all, the opposite scenario, a recorded pressure above the set pressure without an activation, can’t occur if the safety device is properly calibrated, because the device would have activated. This matches our reality, as makers of such devices are governed by a number of code requirements designed to ensure the accurate manufacture of their products. When confronted with an apparent “premature activation” that resulted in an unanticipated system shutdown, a customer may try to raise the operating limits of the safety device as a solution. This isn’t a good solution, though, as it is born of faulty assumptions regarding the reported values.
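The claim that the data will always appear to show an early activation can be checked with a simple simulation. The assumptions, all hypothetical: pressure ramps linearly from 0 psig until it reaches a 15 psig burst pressure, the disc opens at exactly that pressure and the vessel vents to 0 psig, and the recorder samples 10 times per second and reports 1-second averages. Because no true sample can exceed the burst pressure, no average of those samples can either.

```python
def true_pressure(t_s, rise_psi_per_s, burst_psig):
    """Hypothetical ramp that vents to 0 psig once the disc bursts."""
    p = rise_psi_per_s * t_s
    return p if p < burst_psig else 0.0

def recorded_peak(rise_psi_per_s, burst_psig, samples_per_s=10, duration_s=60):
    """Highest 1-second average a recorder would report for this event."""
    samples = [true_pressure(k / samples_per_s, rise_psi_per_s, burst_psig)
               for k in range(samples_per_s * duration_s)]
    averages = [sum(samples[i:i + samples_per_s]) / samples_per_s
                for i in range(0, len(samples), samples_per_s)]
    return max(averages)

BURST_PSIG = 15.0
for rate in (0.5, 2.0, 10.0, 50.0):  # psi/s, slow to very fast
    peak = recorded_peak(rate, BURST_PSIG)
    shortfall = (BURST_PSIG - peak) / BURST_PSIG * 100.0
    print(f"rise {rate:5.1f} psi/s: recorded peak {peak:5.2f} psig, "
          f"{shortfall:3.0f}% below burst")
```

With these assumed numbers the recorded peak falls short of the burst pressure by roughly 2%, 14%, 60% and 90% as the rise rate increases. The disc opened at 15 psig every time, yet every record shows it bursting "early," and the faster the event, the earlier it appears.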

The reality is that we’re not good at anticipating all the possible scenarios that can cause a control system failure. We can sense, monitor and control the systems but we can’t protect them very well in that way alone. What we can do is put well-specified devices into place that, in the event of a systems failure, will protect the plant and the people inside it, no matter what data are reported. The real value in these devices stems from taking a failure that might cost human lives and millions of dollars and turning it into a manageable event. Bad things are going to happen, even to our best systems. We can safeguard them, though, and hopefully learn from any events to protect ourselves even better in the future.

ERIC GOODYEAR is a senior engineer at Oseco, Broken Arrow, Okla.