Any discussion about the use of plant operating data with plant management, operational staff or suppliers of plant information management systems will quickly spawn one of these clichés: “We need to make better use of data,” “We need easier access to the data,” “We need to get the right data into the hands of the right people, so they can make the right decisions,” “Don’t just give me more data, give me more knowledge.” The clichés are right. We do need to improve our ability to make efficient use of our investments in automation and plant information systems. But the questions remain: how, and where, is value truly being delivered to the organization?

This call to action is being driven by reduced resources, the desire to maximize capacity utilization, the need to optimize operational performance and the need to ensure compliance with company goals, targets and corporate responsibilities. To put the challenge in perspective, Figure 1 shows the number of refineries in North America and their crude processing capacity. Clearly, we are being asked to do more with less. Data management is an essential element of the solution to this challenge.

Figure 1. Number of refineries and their capacity from 1949 to 2004

Data to knowledge

Over the last 20 years, the chemical, oil and gas industries have invested heavily in automation and plant information systems, and the data are now accessible. We should therefore be able to put them to productive use. Or can we? The challenge with raw data, no matter how accessible, is that they are just data, and data still require a lot of work before they can be turned into knowledge. In most cases, the data must be validated, analyzed and converted into actionable knowledge, and this can still require a significant investment of time and resources.

Key performance indicators (KPIs) have been the first step in putting data into a context that is better aligned with organizational goals. Every plant functional group has high-level objectives and targets, and if raw operational data can be converted into these KPIs in real time or near real time, then non-compliance with operational targets can be identified quickly and decisions can be made (see CP, October, p. 37). But while converting these data into contextualized KPIs is a necessary first step, it does not by itself guarantee the desired operational improvements. If the KPIs themselves are not managed effectively, companies often simply transform the problem of “data overload” into the problem of “KPI overload.”

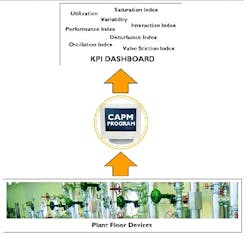

Consider the application of Control Asset Performance Management (CAPM).

In the chemical, oil and gas industries, 75% of a plant’s physical assets are under some form of automation or process control. Companies are now focused on optimizing control performance to improve plant performance. The typical results are attractive: capacity can be increased by 3% to 5% with little or no additional capital investment. What is required instead is an investment of intellectual capital. The objective of a CAPM program is to automatically collect raw data from the distributed control systems (DCSs) and convert them into higher-level KPIs. Most CAPM programs convert real-time measurements of controller operating mode, present value, set point and output into daily KPIs such as a variance index, oscillation index, valve stiction (stickiness) index, utilization index and economic performance index (Figure 2). By monitoring these high-level utilization and performance-based KPIs, it becomes much easier to judge whether the control system is performing optimally.

Figure 2. Raw data to performance metrics.
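To make the raw-data-to-KPI step more concrete, here is a minimal Python sketch of how one day of scans from a single loop (mode, PV, SP, OP) might be reduced to a few daily metrics. The formulas are simplified assumptions chosen for illustration: commercial CAPM packages define their variance, oscillation and stiction indices differently and in more detail, stiction detection is omitted entirely, and the `saturation` metric is an extra illustrative measure rather than one named above.

```python
from dataclasses import dataclass
from statistics import pvariance

@dataclass
class Sample:
    """One DCS scan of a control loop (field names are illustrative)."""
    mode: str   # controller mode; the "AUTO"/"CAS"/"MAN" labels are assumptions
    pv: float   # present (process) value
    sp: float   # set point
    op: float   # controller output, % of range

def utilization_index(samples, normal_modes=("AUTO", "CAS")):
    """Fraction of scans in which the loop ran in a normal closed-loop mode."""
    return sum(1 for s in samples if s.mode in normal_modes) / len(samples)

def variance_index(samples):
    """Population variance of the control error (SP - PV); lower is tighter control."""
    return pvariance([s.sp - s.pv for s in samples])

def oscillation_index(samples):
    """Sign changes of the control error per scan interval: a crude oscillation measure."""
    errors = [s.sp - s.pv for s in samples if s.sp != s.pv]  # ignore zero-error scans
    crossings = sum(1 for a, b in zip(errors, errors[1:]) if (a > 0) != (b > 0))
    return crossings / (len(samples) - 1)

def saturation_index(samples):
    """Hypothetical extra metric: fraction of scans with the output pinned at 0% or 100%."""
    return sum(1 for s in samples if s.op <= 0.0 or s.op >= 100.0) / len(samples)

def daily_kpis(samples):
    """Condense one day of raw loop data into a handful of KPIs."""
    if len(samples) < 2:
        raise ValueError("need at least two scans")
    return {
        "utilization": utilization_index(samples),
        "variance": variance_index(samples),
        "oscillation": oscillation_index(samples),
        "saturation": saturation_index(samples),
    }
```

Run over every loop each day, a function like `daily_kpis` turns millions of scans into a few numbers per loop, which is exactly the consolidation step described above, and also the source of the "KPI overload" that the next section addresses.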

The consolidation of raw data into KPIs or performance metrics is a necessary first step. If not managed carefully, though, it will simply change the nature of the problem. If we consider the CAPM example above, a plant faced with the challenge of monitoring and sustaining the performance of 1,000 control loops may find it difficult to act on the results of 1,000 KPIs per day. The transformation of data into KPIs alone seldom delivers the true improvements we’re looking for.

The need for visualization

The visualization layer is an essential element in getting value from any KPI-based monitoring system. We have all seen the promises of the “digital dashboard” and speedometer-like displays of plant efficiency delivered in real time through a web-based environment. But the true power of the visualization layer is its ability to interactively sort and display the consolidated performance metrics, so that high-priority problems are highlighted and guidance is provided on the actions required.

The visualization layer is built on a combination of filtering, sorting and drill-down analysis techniques. In effect, these techniques form their own layer, an automation layer, between data collection and visualization. More sophisticated visualization techniques, such as Treemap technology, are now available. These techniques allow users to view hundreds of assets in a single display and rapidly identify the key focus areas. Treemap and similar techniques represent a step change in our ability to act quickly on the information presented within a KPI-based environment.

CAPM studies have shown that well-designed KPIs combined with powerful visualization techniques can allow plant personnel to improve the identification of high-priority automation problems by 100%. More importantly, they can complete the task in less than 10% of the time required with traditional analysis techniques. Figure 3 shows examples of both the sorting/filtering and Treemap visualization layers applied to CAPM.

Figure 3. CAPM application with data visualization tools.
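The filtering, sorting and drill-down steps can be illustrated with a short Python sketch that ranks loop KPIs and rolls them up by process unit, the kind of aggregate a Treemap would then size and color. The `LoopKpi` fields, the `variance_ratio` and `economic_weight` inputs and the scoring formula in `priority` are all hypothetical; a real CAPM package would use its own metrics and weighting.

```python
from dataclasses import dataclass

@dataclass
class LoopKpi:
    """Daily KPIs for one control loop (fields are hypothetical)."""
    tag: str               # loop tag
    unit: str              # process unit, e.g. "FCC"
    utilization: float     # fraction of time in normal mode, 0..1
    variance_ratio: float  # today's error variance / baseline variance
    economic_weight: float # relative economic importance of the loop

def priority(k):
    """Hypothetical score: poorly utilized, noisy, valuable loops rank highest."""
    return k.economic_weight * ((1.0 - k.utilization) + max(0.0, k.variance_ratio - 1.0))

def top_problems(kpis, unit=None, n=10, min_score=0.0):
    """Filter by unit, drop healthy loops, sort by score, return the worst n."""
    candidates = [k for k in kpis if unit is None or k.unit == unit]
    scored = [(priority(k), k) for k in candidates]
    scored = [pair for pair in scored if pair[0] > min_score]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:n]

def drill_down(kpis):
    """Roll loop scores up to unit level: the overview a user drills down from."""
    totals = {}
    for k in kpis:
        totals[k.unit] = totals.get(k.unit, 0.0) + priority(k)
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)
```

A user would start from `drill_down(kpis)`, pick the worst-scoring unit, then call `top_problems(kpis, unit="FCC")` to see the individual loops behind it. Of 1,000 loops, only the handful with a non-zero score ever reach the screen.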

What about workflow?

By today’s standards in the chemical, oil and gas industries, any company with a real-time, web-based KPI environment for operational views and decision-making is considered a pacesetter in its effective use of data. So has the “real-time web-based enterprise” delivered on the promises? And what’s the next step for these pacesetters?

To deliver the full value these systems promise, the meaningful knowledge they generate must be acted upon. This requires integration with the plant’s workflow processes. Consolidating data into KPIs and visualizing them often retains a data-centric view that keeps the information in a functional silo. If we consider CAPM again, many systems provide performance metrics that are accessible only to the control engineers. In effect, all the information is channeled through a human funnel before it is dispatched more widely. This model (Figure 4) neither empowers the organization nor facilitates better work processes. We must move from a data-centric view to a functional, “process-centric” view, in which the system directly supports the higher-impact business processes.

Figure 4. Information control limits integration.

Consider the case of a poorly performing slide valve on a refinery fluidized catalytic cracking (FCC) unit. Slide valves control the flow of catalyst to the reactor. Poor performance, due to valve wear or mechanical complications, can cause serious process upsets and a possible unit trip. A traditional CAPM program would only consolidate the valve and control data into performance metrics for the control engineer to review. Now, let’s look at this situation from a functional or business process perspective. A poorly operating slide valve has a significant impact on the operation of the entire refinery and should directly impact decisions made by the following functional roles:

- Maintenance must understand the maintenance requirements, risk of failure and priority level of the valve. Maintenance planning may need to go into overdrive, accelerating future maintenance. The reliability of the valve, the FCC and the refinery may need to be re-evaluated.

- Operations must be comfortable interpreting valve performance, such as the rate of degradation, using both CAPM and alarm information. Operators must also understand the risk of a unit trip and its consequences (e.g., environmental and safety), and know the procedures for managing the valve problem.

- Control must be aware of the effect the degradation in valve performance will have on the overall unit control system. Procedures must be established for root-cause analysis of the valve problem and for bypassing or disabling parts of the advanced control system, so the unit can keep operating until a soft landing (planned shutdown).

- Process engineering must assess the impact on overall unit performance, future capital projects and the overall cost associated with poor control. In addition, if valve or plant reliability declines, safety may need to be reassessed (e.g., through a HAZOP study).

- Planning and scheduling must appreciate the consequences of a valve problem on inventories (feedstock and product) and future production plans. Customer expectations may need to be managed and alternative supplies may need to be tapped. Plans may need to be made to delay feedstock deliveries, particularly for dated or hazardous materials.

- Management must forecast the potential economic loss from reduced unit performance, lower product quality and a possible plant shutdown.

This distribution of knowledge requires not only an understanding of the relevant functional roles but also the integration of several data sources or knowledge bases. Figure 5 shows both the data and workflow requirements for this to happen.

Figure 5. Integrated decision making.
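The slide valve example amounts to routing one detected problem, together with data gathered from several systems, to every role that must act on it. The Python sketch below shows one way to express that routing. The severity scale, the role-to-data mapping in `ROUTING` and names such as `capm_kpis` or `maintenance_history` are hypothetical placeholders, not the interfaces of any particular CPM product.

```python
from dataclasses import dataclass, field

@dataclass
class AssetEvent:
    """A detected equipment problem (structure is hypothetical)."""
    asset: str
    unit: str
    severity: int  # assumed scale: 1 = watch, 2 = degrading, 3 = risk of unit trip
    sources: dict = field(default_factory=dict)  # data pulled from several systems

# role -> (minimum severity that involves the role, data the role needs to see)
# Thresholds and data names are illustrative assumptions.
ROUTING = {
    "control": (1, ["capm_kpis"]),
    "maintenance": (2, ["capm_kpis", "maintenance_history"]),
    "operations": (2, ["capm_kpis", "alarms"]),
    "process_engineering": (2, ["capm_kpis", "unit_performance"]),
    "planning": (3, ["inventory", "production_plan"]),
    "management": (3, ["unit_performance", "economics"]),
}

def route(event):
    """Build one notification per involved role, each with only the data it needs."""
    notifications = {}
    for role, (min_severity, needs) in ROUTING.items():
        if event.severity >= min_severity:
            notifications[role] = {
                need: event.sources.get(need, "not available") for need in needs
            }
    return notifications
```

At severity 1 only the control engineer is involved, which is the traditional CAPM model of Figure 4; at severity 3 every role in the list above receives a view tailored to its decisions, and any data source that has not been integrated shows up explicitly as "not available".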

Although the refinery FCC unit slide valve example may be an extreme case, it demonstrates the need to understand the overall data and workflow requirements if these systems are expected to support business processes and deliver their full return on investment. Without workflow integration, the promises of the integrated operating environment will always exceed the reality delivered.

Enabling the workflow processes

Collaborative Production Management (CPM) is often defined as a method of unifying disparate systems to achieve operational excellence. This unification must be performed along two lines: we must combine both the data/information layer and the functional layer into a single workflow environment. This allows plant personnel, from operators to managers, to escape complicated workflows in which they must work with multiple systems to assess situations and perform tasks. CPM enables collaboration and helps people in different roles work together, each understanding their specific requirements in the context of the bigger picture.

Let’s return to the refinery FCC unit slide valve malfunction. Figure 5 shows the data/knowledge integration requirements and the functional, user-level integration. Sharing the data ensures that each functional group in the plant understands the operational situation and its role in making improvements. In essence, this is the integration needed to truly deliver on the promises of collaborative manufacturing.

Although the focus here is on the operational level, the challenge is even greater as you move up into the planning, or logistics, layer. Disparate databases, the financial impact of decisions and an abundance of custom calculations in individually created spreadsheets often produce a chaotic environment. Planning CPM requirements at this level follows the same principles as at the operational level and can yield even greater benefits.

Most companies that set out to achieve operational excellence through a web-enabled, real-time enterprise platform hope that it will deliver a collaborative production management environment. For companies that succeed, the benefits to the organization are significant, because implementation changes the way people work. Typical benefits include:

- improved capacity utilization (3% to 5%);

- increased equipment reliability (5% to 8%);

- optimized production of higher value components through yield upgrades (8% to 12%);

- better compliance reporting (environmental and safety);

- enhanced efficiency and productivity of staffing (10% to 50%); and

- higher energy efficiency (5% to 15%).

The next step: exception-based management

So what does the future hold for CPM? The push forward will not end with integrating real-time information seamlessly into streamlined workflows. Rather, decision-makers will push for even greater efficiency by minimizing the time people spend asking questions and monitoring KPIs. Users will learn quickly when things are going wrong, and when they are going very well. An information system based on pre-determined business targets and logic will alert users to non-compliance with goals. Such a system will give decision-makers insight into the situation, as well as an action plan to resolve the problem. Finally, the system will track each non-compliance item through to resolution, ensuring it is dealt with in a timely manner.
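An exception-based system of this kind can be sketched as a small rules engine: each KPI has a target band and a pre-determined action plan, an alert is raised only when a KPI leaves its band, and every open item is tracked until it is closed or becomes overdue. This Python sketch is illustrative only. The `Target` and `Deviation` structures, the `allowed_time` deadline and the rule that an item closes automatically when its KPI returns to the band are assumptions; many sites would instead require a person to sign off the resolution.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

@dataclass
class Target:
    kpi: str
    low: float
    high: float
    action_plan: str         # pre-determined guidance shown with the alert (assumed)
    allowed_time: timedelta  # how long a deviation may stay open (assumed)

@dataclass
class Deviation:
    kpi: str
    value: float
    action_plan: str
    opened: datetime
    due: datetime
    resolved: Optional[datetime] = None

class ExceptionManager:
    """Alert only on non-compliance and track each item until it is resolved."""

    def __init__(self, targets):
        self.targets = {t.kpi: t for t in targets}
        self.open = {}    # kpi -> unresolved Deviation
        self.closed = []  # resolved Deviations, kept for reporting

    def evaluate(self, kpi, value, now):
        """Return a new Deviation when a KPI leaves its band; otherwise None."""
        t = self.targets[kpi]
        in_band = t.low <= value <= t.high
        if not in_band and kpi not in self.open:
            dev = Deviation(kpi, value, t.action_plan, now, now + t.allowed_time)
            self.open[kpi] = dev
            return dev
        if in_band and kpi in self.open:
            dev = self.open.pop(kpi)
            dev.resolved = now  # KPI back in band: close the item
            self.closed.append(dev)
        return None

    def overdue(self, now):
        """Open items that have passed their due time, for escalation."""
        return [d for d in self.open.values() if now > d.due]
```

Because `evaluate` returns something only when a KPI first leaves its band, users see one alert per problem rather than a stream of KPI readings, while `overdue` gives managers the list of items that have not been dealt with in time.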

We have all heard the promises of how more data and more knowledge will deliver significant benefits to plant operations. But before we embark on building the real-time enterprise and providing seamless access to every piece of data, it is important to understand where and how value is delivered. Data access, KPI generation, digital dashboards, web-based visualization and a collaborative workflow environment are all essential pieces of the puzzle. We also need to walk before we run: understanding the stages and having a strong vision of where you need to go are essential first steps in adopting a staged approach to a successful CPM system.

Mike Brown is vice president, technology at Matrikon, Edmonton, Alberta.